Flash Wars: Where could an autonomous weapons revolution lead us?


This week, the High Contracting Parties to the Convention on Certain Conventional Weapons meet in Geneva. One item on the agenda is lethal autonomous weapons. Lethal autonomous weapons – LAWS – are weapons able to carry out the full targeting cycle of a military operation without human intervention. They are systems equipped with sensors, which in most cases rely on artificial intelligence to make decisions quickly and without human involvement.

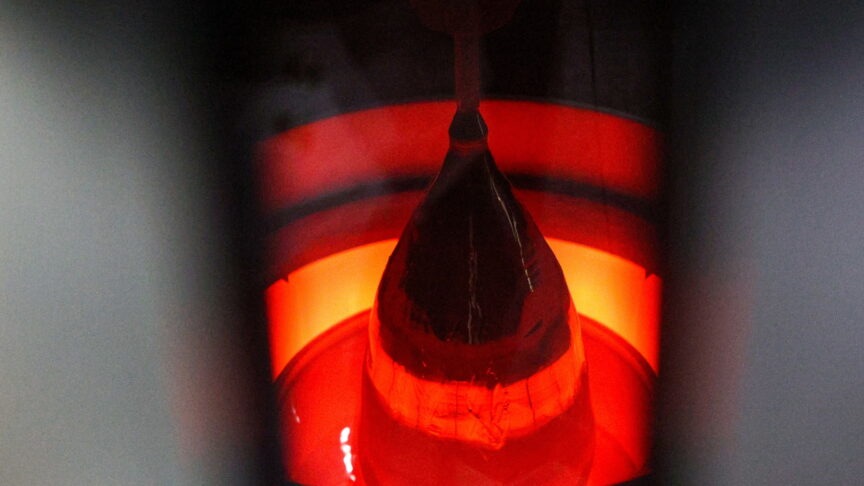

Although they evoke images of science fiction, weapon systems that at least partly fit this definition already exist. For instance, air defence systems such as PATRIOT detect, track, and shoot down incoming missiles or rockets. They do so without direct human intervention, as no human would be fast enough to give the order for each interception. Similarly, Israel’s Harpy system – a type of ‘kamikaze drone’ – can loiter in the air until it detects radar emissions. It then destroys the radar by slamming itself into it, without needing a specific human order.

What might a world in which lethal autonomous systems are in widespread use look like? Their potentially revolutionary impact could extend across the military sphere, politics, and society.

Militarily, faster operations would be one defining characteristic of this new world. A computer can digest large amounts of data much more quickly than a human can, and it can make decisions within milliseconds. With LAWS, warfare could accelerate to a pace beyond human comprehension.

This is even more the case in cyberspace, where there are no physical limitations to slow things down. Automated cyber wars could soon involve autonomously attacking, self-replicating cyber weapons. This acceleration of operations could also lead to undesired chain reactions, known in the world of automated finance as “flash crashes”. These happen when one automated system reacts to another, which causes a third to react, and so on. With LAWS, “flash crashes” could turn into “flash wars”.

New ways of conducting military operations are on the horizon, and a new arms race is likely. But European countries remain divided over how to agree common rules on autonomous weapons.

If the hopes of some developers come true, more precise operations, causing fewer civilian casualties, may become possible. This is again due to machines’ ability to process more information, which decreases the likelihood of mistakes. Further, as machines lack emotions, they will not decide to commit war crimes out of hate, and, as there is no ‘heat’ of battle for machines, they will not make mistakes caused by emotions. Finally, some particularly optimistic developers suggest that it may even be possible to programme the laws of war, such as rules of engagement, humanitarian law, or the principle of proportionality, into autonomous systems. However, optimism on this front has faded in recent years.

That said, systems that use artificial intelligence are not programmed in the classical sense but ‘learn’ through various machine-learning processes. This opens the door to unpredictable mistakes. Equally, AI can be biased if the data from which it has learned is biased – a common problem with any kind of human-made data.

Furthermore, LAWS could enable new ways of conducting military operations. Most interesting in this context are ‘swarms’, made up, for instance, of hundreds of autonomous drones. Swarms could allow for waves of attacks, with the systems in a swarm striking one after the other to break through enemy defences. Another way to use swarms of autonomous systems is to form “kill nets” or “kill webs”: flying minefields that could allow complete control of enemy territory.

Politically, the use of these systems by non-state actors is concerning. Non-state groups, increasingly important in global relations and conflict, are smaller and less bureaucratic than states, and are therefore often able to adopt new technologies quickly. Such groups have found innovative uses for drones in recent years, from propaganda to armed attacks. They may be similarly innovative with artificial intelligence and autonomous systems, for example by using facial recognition in attacks, or simply by carrying out faster, less traceable attacks. Non-state groups are unlikely to acquire state-built, military-grade LAWS, but they can gain access to dual-use technology.

By making it harder to attribute an attack to its perpetrator, LAWS will have repercussions beyond non-state use. The number of attacks whose perpetrators were difficult or time-consuming to identify has already grown in recent years. Without a pilot present, for instance, to help determine an attacker’s nationality or ideology, attribution will become an even bigger challenge.

In some ways the most important changes are likely to occur within society itself. LAWS present unique ethical challenges around humans giving up responsibility for killing by fully transferring decisions over life and death to machines. For centuries, technological advances have gradually increased the distance between humans and the act of killing, but LAWS for the first time allow the human to be removed from decision-making entirely. Ethicists have argued that machines are unable to appreciate the value of a human life and the significance of its loss; allowing them to kill human beings would violate the principles of humanity. As political scientist Frank Sauer puts it, “a society that no longer puts the strain of killing in war on its collective human conscience, risks nothing less than the abandonment of one of the most fundamental civilisatory values and humanitarian principles”.

As a result, states around the world are currently discussing ways to regulate LAWS. Proposals range from national rules about keeping humans “in the loop” or guaranteeing “meaningful human control”, to calls for an international ban on LAWS. What types of regulation and norms emerge will depend partly on public opinion, but mostly on the willingness of states to limit their own options. Current debates in the United Nations forum of the Convention on Certain Conventional Weapons (CCW), however, suggest that this willingness is limited. But even if an agreement not to develop and use lethal autonomous weapons does materialise, the risk of an arms race will remain. Because LAWS offer such speed of operation, one actor employing them will lead others to feel they have to follow. An arms race can then easily develop, with even states reluctant to use LAWS caught in an escalatory logic that drives them to employ autonomous weapons.

The interest in LAWS in Europe is high, but views are diverse, ranging from states advocating a ban to states funding the development of highly autonomous demonstrator systems. The British Taranis drone and the French nEUROn (a project also involving companies from Sweden, Greece, Switzerland, Spain, and Italy) are highly autonomous proofs of concept that may feed into a pan-European system. For now, neither BAE Systems nor Dassault officially plans for Taranis or nEUROn to be able to engage targets without human intervention. But, given the systems’ ability to detect targets autonomously, this next step would, in technical terms, be an easy one.

European Union countries do not have a common position on whether or how to regulate LAWS. This is despite the fact that the EU has been represented at the CCW discussions in Geneva, and that the European Parliament recently adopted a resolution calling for an international ban on LAWS. At the last CCW discussion in September 2018, EU member states did not take a common position: Austria led a group of countries calling for a full ban, while France and Germany argued for a middle-ground political agreement. Given the danger of an escalatory arms race, international coordination is essential. Europe could and should lead by example, showing how open communication and coordination can work.

Most importantly, given the fundamental ethical challenges that the use of autonomous weapons poses, Europe should encourage a public discussion of the topic. If the most revolutionary changes caused by LAWS are societal, all of society should be involved in agreeing the answers. In this area, the liberal democracies of Europe should lead the way towards an informed and balanced public debate. The growing interest in European defence research, and the funding planned for it, should therefore include sufficient provision for social science research and public information on these crucially important matters.

The European Council on Foreign Relations does not take collective positions. ECFR publications only represent the views of their individual authors.